
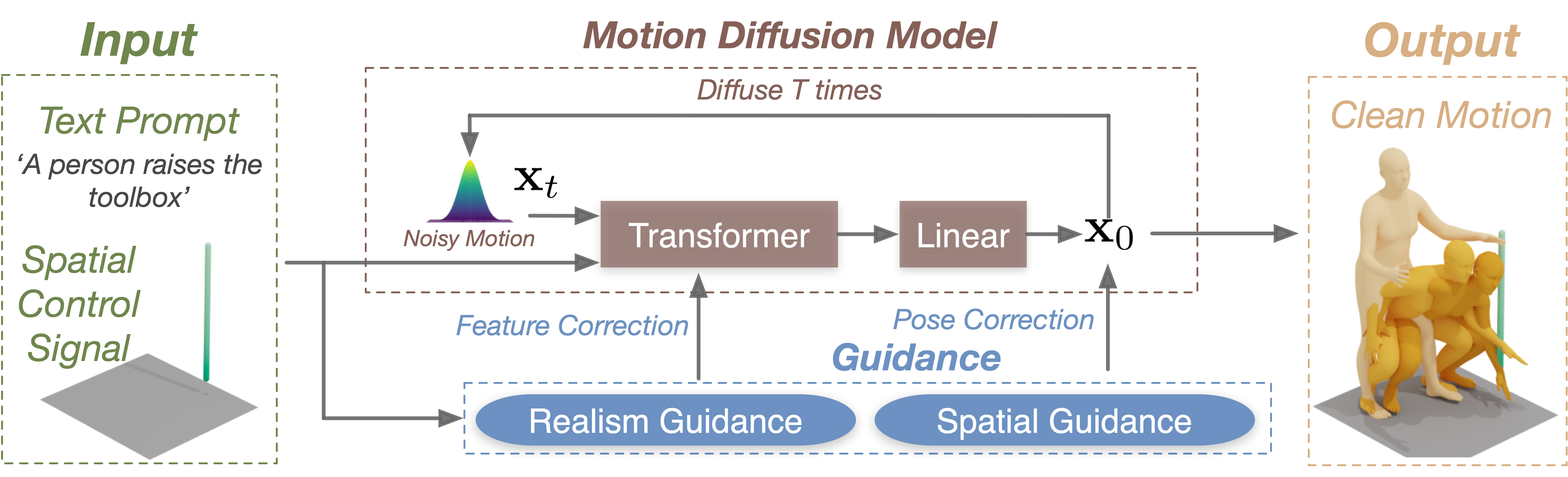

We present a novel approach named OmniControl for incorporating flexible spatial control signals into a text-conditioned human motion generation model based on the diffusion process. Unlike previous methods that can only control the pelvis trajectory, OmniControl can incorporate flexible spatial control signals over different joints at different times with only one model. Specifically, we propose analytic spatial guidance that ensures the generated motion tightly conforms to the input control signals. At the same time, realism guidance is introduced to refine all the joints so that the generated motion is more coherent. Spatial and realism guidance are both essential and highly complementary, balancing control accuracy against motion realism. By combining them, OmniControl generates motions that are realistic, coherent, and consistent with the spatial constraints. Experiments on the HumanML3D and KIT-ML datasets show that OmniControl not only significantly improves over state-of-the-art methods on pelvis control but also shows promising results when constraining other joints.
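To make the idea of spatial guidance concrete, here is a toy sketch rather than OmniControl's implementation. It assumes a hypothetical layout in which a motion is a list of frames, each holding global `(x, y, z)` joint positions, and the control signal is a sparse dict mapping `(frame, joint)` to a target position. `spatial_loss` and `spatial_guidance` are illustrative names. The guidance takes repeated gradient steps on a squared-distance loss, so joints can be constrained at arbitrary frames.

```python
from typing import Dict, List, Tuple

# Hypothetical toy layout (not the paper's data format).
Vec3 = Tuple[float, float, float]
Motion = List[List[Vec3]]            # motion[frame][joint] -> global position
Control = Dict[Tuple[int, int], Vec3]  # (frame, joint) -> target position


def spatial_loss(motion: Motion, control: Control) -> float:
    """Sum of squared distances between controlled joints and their targets."""
    total = 0.0
    for (f, j), target in control.items():
        total += sum((p - c) ** 2 for p, c in zip(motion[f][j], target))
    return total


def spatial_guidance(motion: Motion, control: Control,
                     tau: float = 0.1, steps: int = 10) -> Motion:
    """Gradient descent on spatial_loss; returns a new motion, input is untouched."""
    out = [list(frame) for frame in motion]  # copy frames so the input is not mutated
    for _ in range(steps):
        for (f, j), target in control.items():
            # d/dp (p - c)^2 = 2 (p - c); only controlled joints receive a gradient
            out[f][j] = tuple(p - tau * 2.0 * (p - c)
                              for p, c in zip(out[f][j], target))
    return out


# Usage: 3 frames, 2 joints; pin joint 1 at frame 2 to (1, 1, 0).
motion = [[(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)] for _ in range(3)]
control = {(2, 1): (1.0, 1.0, 0.0)}
guided = spatial_guidance(motion, control)
```

Every uncontrolled joint comes back unchanged, because the loss gradient is zero there. This is the gap the realism guidance is meant to fill by refining the remaining joints.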

Our model generates human motions from a text prompt and a spatial control signal. At each denoising diffusion step, the model takes the text prompt and a noised motion sequence as input and estimates the clean motion. To incorporate flexible spatial control signals into the generation process, a hybrid guidance consisting of realism and spatial guidance encourages the motion to conform to the control signals while remaining realistic.
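The sketch below is a heavily simplified, 1D toy of one guided step, under stated assumptions. The pelvis is stored as per-frame velocities (a relative representation), so its global position is a running sum. The spatial-guidance gradient therefore has to flow back through that sum. `guided_step`, `spatial_grad`, and the toy denoiser are hypothetical. The guidance is applied directly to the clean-motion estimate for simplicity, and the realism guidance, which conditions the network itself, is assumed to live inside the `denoiser` callable.

```python
from itertools import accumulate
from typing import Callable, Dict, List

Traj = List[float]  # toy: per-frame pelvis velocities along one axis


def to_global(velocities: Traj) -> Traj:
    """Relative -> global: the pelvis position is the running sum of velocities."""
    return list(accumulate(velocities))


def spatial_grad(velocities: Traj, control: Dict[int, float]) -> Traj:
    """Analytic gradient of G = sum over controlled frames t of (p_t - c_t)^2.

    Since p_t = v_0 + ... + v_t, dG/dv_k = sum over controlled t >= k of
    2 (p_t - c_t), i.e. a reverse cumulative sum of per-frame residuals.
    """
    pos = to_global(velocities)
    residual = [0.0] * len(velocities)
    for t, c in control.items():
        residual[t] = 2.0 * (pos[t] - c)
    return list(accumulate(reversed(residual)))[::-1]


def guided_step(x_t: Traj, denoiser: Callable[[Traj], Traj],
                control: Dict[int, float], tau: float = 0.1,
                iters: int = 5) -> Traj:
    """Estimate the clean motion, then pull it toward the control signal."""
    x0 = denoiser(x_t)  # hypothetical denoiser (realism guidance assumed inside)
    for _ in range(iters):
        g = spatial_grad(x0, control)
        x0 = [v - tau * gi for v, gi in zip(x0, g)]
    return x0


def toy_denoiser(x: Traj) -> Traj:
    """Hypothetical stand-in for the network: shrinks the noisy input."""
    return [0.5 * v for v in x]


# Usage: the pelvis should reach global position 4.0 at the last frame.
x0 = guided_step([1.0, 1.0, 1.0, 1.0], toy_denoiser, control={3: 4.0})
```

A control point at frame `t` moves every velocity up to `t`. This is why constraining a global position is not a purely local edit in a relative representation.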

Text prompt: a person plays a violin with their left hand in the air and their right hand holding the bow.

Without realism guidance, the model cannot adjust the remaining joints, yielding unrealistic and incoherent motions.

Without spatial guidance, the generated motion cannot tightly follow the spatial constraints.

We also report the performance of a variant that computes the spatial-guidance gradient w.r.t. the input noised motion.

The visualization confirms that our full model is much more effective in terms of both realism and control accuracy.
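The gradient-placement variant above differs only in what the spatial loss is differentiated against. The following toy sketch assumes a hypothetical linear "denoiser" `x0 = scale * x_t` and a squared-distance loss. It contrasts differentiating w.r.t. the model's estimate with differentiating w.r.t. the noised input, which by the chain rule picks up the denoiser's Jacobian. The function names are illustrative only.

```python
from typing import List


def grad_wrt_estimate(x0: List[float], target: List[float]) -> List[float]:
    """dG/dx0 for G(x0) = sum_i (x0_i - target_i)^2."""
    return [2.0 * (a - b) for a, b in zip(x0, target)]


def grad_wrt_noised(x_t: List[float], scale: float,
                    target: List[float]) -> List[float]:
    """dG/dx_t for a hypothetical linear denoiser x0 = scale * x_t.

    Chain rule: dG/dx_t = (dx0/dx_t)^T dG/dx0; the Jacobian here is just
    `scale`. With a real network this requires backpropagating through it.
    """
    x0 = [scale * v for v in x_t]
    return [scale * g for g in grad_wrt_estimate(x0, target)]


x_t = [2.0, -1.0]
g_est = grad_wrt_estimate([0.5 * v for v in x_t], [0.0, 0.0])
g_noised = grad_wrt_noised(x_t, 0.5, [0.0, 0.0])
```

In this linear toy the two gradients differ only by the factor `scale`. For a real denoiser the Jacobian is input-dependent, and computing it means differentiating through the whole network.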